Q&A with NVIDIA: Which NVIDIA platform should enterprises invest in, given NVIDIA's faster release cadence for its data centre products?

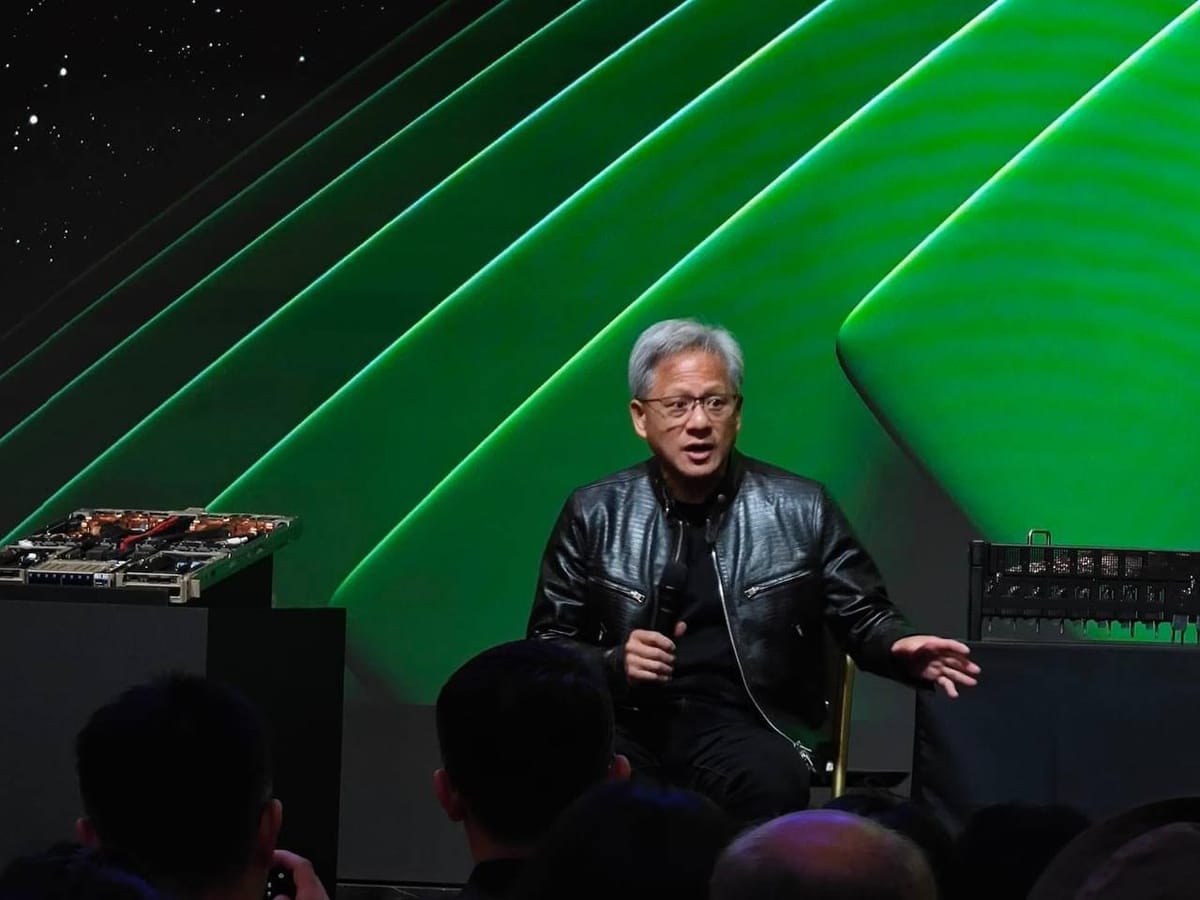

NVIDIA Founder and CEO Jensen Huang shares his thoughts on how to invest in AI infrastructure to build an AI Factory.

The CTO Guide was present at NVIDIA's COMPUTEX 2024 Media Q&A.

At the COMPUTEX 2024 keynote, NVIDIA announced a new cadence and roadmap for its data centre and AI Factory products. It will release new products every year, with a new platform every two years.

Enterprises that plan to build data centres and AI Factories may be concerned. Should they wait for the next generation, which could be significantly faster and more efficient, or simply buy what is available now?

We posed this question to NVIDIA Founder and CEO Jensen Huang, and here is what he had to say.

Zhi Cheng (CTO Guide):

Building their own AI infrastructure will be a significant investment for companies. Given that NVIDIA is moving to a yearly cadence for new products and architectures, each more efficient and better performing than the last, how would you recommend that enterprises planning to build an AI Factory choose the right product generation? Would a new generation make the older ones outdated, since they would be significantly less efficient than the current generation?

Jensen Huang:

Firstly, you would build a new data centre and use it for 4 or 5 years anyway. If you build one this year, and you build one next year (and so on), (you will use each of these data centres for 4 to 5 years). Today, there is one trillion dollars' worth of data centres built in the world. By 2030, another 5 years or so, it will grow to 3 trillion dollars. So let's say that every year, there will be 750 billion dollars' worth of data centres which you can build.

So last year you used Hopper, this year you use Blackwell, and subsequently Blackwell Ultra, Rubin and Rubin Ultra. You wouldn't buy 4 years' worth at one time, but rather 1 year at a time. That is why the data centres in the world today have a lifetime amortization of 4 to 5 years.

The first thing we should do is to stop building for general-purpose computing alone. This year's 250 billion dollars should go to accelerated computing and generative AI.

In fact, the reason NVIDIA is growing so fast is that all the cloud service providers and data centres know this. They know that the time has come to jump on accelerated computing and generative AI. General-purpose computing was the last era. (General-purpose computing has lasted for) 60 years (and it) is enough. Microwave ovens are not 60 years. 60 years is enough. I think it's time for us to move towards a new computing model, save energy, save money, and, very importantly, enable a new way of doing computing called generative AI.

(The above text is transcribed and may not reflect exactly what Jensen Huang said, word for word.)
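Jensen's dollar figures can be read as a simple amortization estimate. Assuming (our interpretation, not something he stated) that the installed base of data centres is replaced evenly over its lifetime, annual spend is roughly the installed base divided by that lifetime: $1 trillion / 4 = $250 billion today, and $3 trillion / 4 = $750 billion around 2030. The short Python sketch reproduces that back-of-the-envelope calculation; the function name `annual_spend` is hypothetical.

```python
# Hypothetical back-of-the-envelope model: assumes the installed base of data
# centres is refreshed evenly (straight-line) over its amortization lifetime.

TRILLION = 1e12
BILLION = 1e9


def annual_spend(installed_base_usd: float, lifetime_years: float) -> float:
    """Return the yearly spend needed to refresh an installed base evenly."""
    return installed_base_usd / lifetime_years


# Figures quoted in the interview: ~$1T installed today, ~$3T by around 2030.
for label, base in [("today", 1 * TRILLION), ("~2030", 3 * TRILLION)]:
    # Jensen cites a 4 to 5 year lifetime, so try both ends of that range.
    for lifetime in (4, 5):
        spend = annual_spend(base, lifetime)
        print(f"{label}: ${base / TRILLION:.0f}T over {lifetime} years -> ${spend / BILLION:.0f}B per year")
```

At a 5-year lifetime the same model gives $200 billion and $600 billion per year, so the quoted $250 billion and $750 billion figures line up with the 4-year end of his 4-to-5-year range.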

Thoughts:

Companies building public or private data centres need to plan for those facilities becoming obsolete within 4 to 5 years, in terms of both performance and efficiency. While cloud service providers that operate large-scale data centres can refresh their hardware every year, smaller enterprises will likely find it more practical to refresh less often. Enterprise decision-makers will need to weigh the value of investing in a new AI Factory and data centre over a 4-to-5-year horizon, with the understanding that the infrastructure will be amortized and depreciated over that period. Demand for AI compute will only grow, and depending on the expected usage and requirements of the data centre, decision-makers will have to reassess along the way, before their assets become a liability.