What KLEVV / Essencore’s Latest DDR5 ICs Reveal About the Future of Memory Strategy

Why 1b and 1c DRAM Nodes Matter for Your AI Infrastructure in 2025

AI infrastructure in 2025 is evolving quickly, and while most attention goes to GPUs and accelerators, DRAM remains foundational: it can either bottleneck a system or unlock its performance. At COMPUTEX 2025, Essencore, a subsidiary of SK Group that markets memory under the KLEVV brand, showcased DDR5 ICs rated at up to 7200 MT/s and built on the 1b and 1c DRAM process nodes.

These ICs, positioned under the KLEVV brand, underscore a key shift in enterprise memory: process node advancement is no longer only about manufacturing yields. It now determines the bandwidth, power efficiency, and form factors available to AI servers, edge devices, and memory-bound workloads.

Understanding 1b and 1c: Not Just Smaller, But Smarter

The terms 1bnm (1b) and 1cnm (1c) refer to successive generations of 10nm-class DRAM process technology, following 1x, 1y, 1z, and 1a. The names don’t denote specific nanometer dimensions; each step is a generational improvement in:

- Cell density (enabling 32Gb+ chips)

- Power efficiency (often operating at or below 1.1V)

- Speed ceilings (DDR5-7200+ and LPDDR5X-8533+)

- Yield and thermal behavior, enabled by EUV lithography and design refinements

These nodes are essential in delivering the performance and form factors required for next-gen AI platforms, especially for large language models (LLMs), data-intensive inference, and memory bandwidth-constrained workloads.

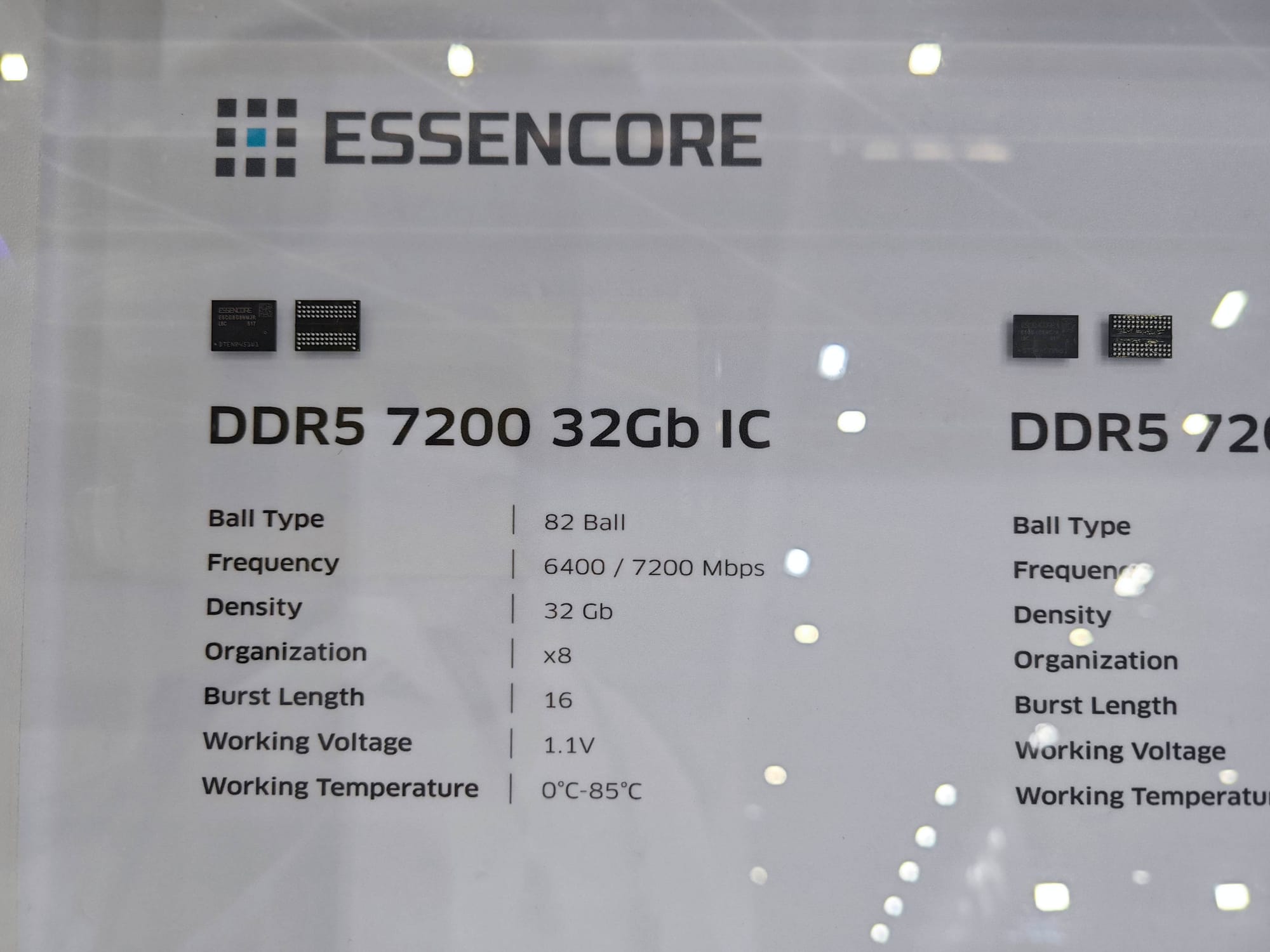

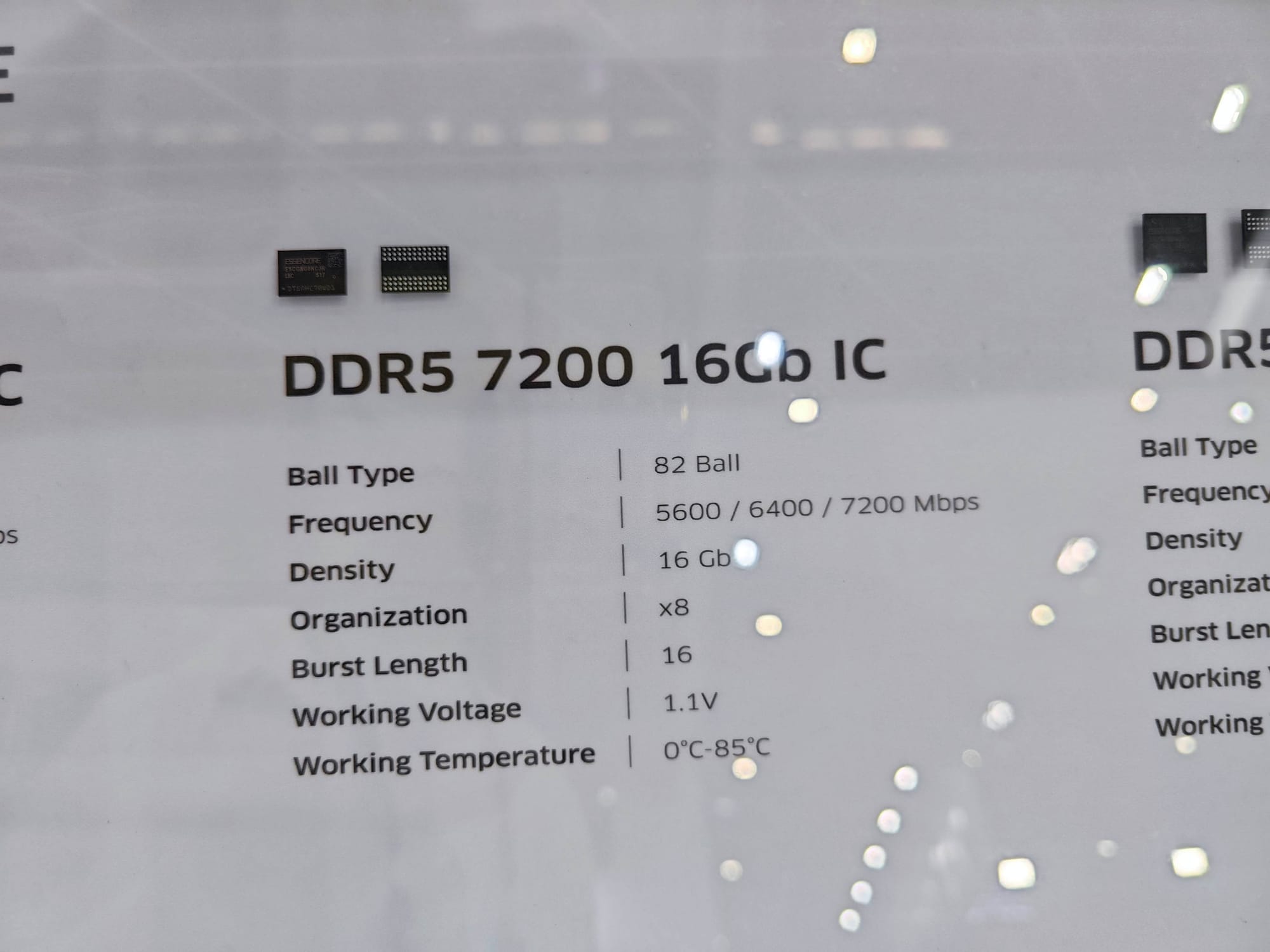

Essencore’s Showcase: DDR5 7200 ICs for the AI Era

Essencore exhibited two DRAM ICs: a 1bnm 32Gb DDR5-7200 part and a 1cnm 16Gb DDR5-7200 part.

🔹 DDR5 7200 32Gb IC

- Data rate: 6400 / 7200 MT/s (Mbps per pin)

- Density: 32 Gb

- Voltage: 1.1V

- Use case: High-capacity modules (64–128GB) for AI servers, including newer module form factors such as CAMM2 and MRDIMM

🔹 DDR5 7200 16Gb IC

- Data rate: 5600–7200 MT/s (Mbps per pin)

- Density: 16 Gb

- Target: High-performance desktops, laptops, and embedded AI workloads

Built on the 1b and 1c process nodes, these ICs combine higher density with stable operation at high data rates, supporting AI workloads that need more throughput with less thermal overhead.

Why This Matters to AI Infrastructure Leaders

Process advances like 1b and 1c rarely show up in marketing headlines, but they enable three concrete gains:

1. Higher Throughput

With DDR5-7200+ modules, memory-bound AI workloads can see:

- Faster training times

- Reduced latency for inference

- Higher utilization of CPU/GPU pipelines due to fewer memory stalls
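The throughput gain can be made concrete with a back-of-the-envelope calculation. The Python sketch below assumes a standard 64-bit DDR5 module data bus (two 32-bit subchannels, ECC bits excluded). The function name is illustrative, and the result is a theoretical peak rather than measured performance:

```python
def peak_bandwidth_gbs(data_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth of one module in GB/s.

    data_rate_mts:  data rate in MT/s (e.g. 7200 for DDR5-7200).
    bus_width_bits: data bus width; 64 bits is an assumption (ECC excluded).
    """
    bytes_per_transfer = bus_width_bits / 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return data_rate_mts * bytes_per_transfer / 1000


for rate in (5600, 6400, 7200):
    print(f"DDR5-{rate}: {peak_bandwidth_gbs(rate):.1f} GB/s per module")
```

Under these assumptions, moving from DDR5-5600 to DDR5-7200 raises peak per-module bandwidth from 44.8 GB/s to 57.6 GB/s, roughly 29% more. Sustained bandwidth in real systems will be lower.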

2. Greater Capacity per Module

32Gb dies allow for:

- Fewer DIMMs to reach 128–256GB memory footprints

- Improved airflow, lower power draw, and easier board layouts
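To see how die density translates into module capacity, here is a rough Python sketch. It assumes a 64-bit data bus, one die per package, and no ECC devices; the function and parameter names are hypothetical and do not describe any specific Essencore module:

```python
def module_capacity_gb(die_density_gbit: int, device_width_bits: int,
                       ranks: int, data_bus_bits: int = 64) -> int:
    """Approximate module capacity in GB (ECC devices not counted).

    die_density_gbit:  density of each DRAM die in gigabits (16 or 32 here).
    device_width_bits: data width of each DRAM device (x4 -> 4, x8 -> 8).
    ranks:             number of ranks on the module.
    """
    if data_bus_bits % device_width_bits != 0:
        raise ValueError("device width must divide the data bus width")
    devices_per_rank = data_bus_bits // device_width_bits
    total_gbit = die_density_gbit * devices_per_rank * ranks
    return total_gbit // 8  # 8 gigabits per gigabyte


print(module_capacity_gb(32, 8, 2))  # x8 32Gb dies, dual rank
print(module_capacity_gb(32, 4, 2))  # x4 32Gb dies, dual rank
print(module_capacity_gb(16, 4, 2))  # x4 16Gb dies, dual rank
```

With these assumptions, dual-rank modules built from 32Gb dies land at 64 GB (x8) and 128 GB (x4), while the same x4 layout with 16Gb dies stops at 64 GB. That is why the denser die can halve the DIMM count for a given memory footprint.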

3. Power Efficiency at Scale

Lower operating voltages help:

- Reduce total system power usage (especially in multi-socket server deployments)

- Improve battery life and thermal margins in edge inference systems
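A first-order estimate shows why small voltage reductions matter. The Python sketch below assumes that dynamic switching power scales with the square of the supply voltage at fixed capacitance and frequency, which is the usual CMOS approximation. The 1.2 V reference is DDR4's nominal supply, used here only as a comparison point; leakage, I/O, and refresh power are ignored:

```python
def relative_dynamic_power(v_new: float, v_ref: float) -> float:
    """Ratio of dynamic power at v_new versus v_ref, assuming P ~ C * V^2 * f
    with capacitance and frequency held constant (a simplification)."""
    return (v_new / v_ref) ** 2


ratio = relative_dynamic_power(1.1, 1.2)
print(f"Dynamic power at 1.1 V relative to 1.2 V: {ratio:.2f}")
```

Under this simplified model, running at 1.1 V instead of 1.2 V cuts dynamic power to about 84% of the reference. Across hundreds of DIMMs in a multi-socket deployment, that difference adds up.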

Do Procurement Teams Actually Care?

Not always, or at least not explicitly.

Most CTOs or purchasing leads don’t ask for “1b” or “1c” DRAM directly. Instead, they care about:

- DDR5 performance (7200 MT/s and up)

- Module capacity (64GB, 128GB)

- Compatibility and power efficiency

- Thermals and long-term reliability

In practice, 1b/1c nodes underpin all of the above. DRAM node details rarely make it into spec sheets, but vendors like Essencore/KLEVV rely on these nodes to hit enterprise performance targets, especially in memory-bound applications like AI inference, financial modeling, and 3D rendering.

In this sense, 1b/1c process nodes act as an invisible infrastructure layer: they extend what AI systems can do without demanding attention.

Final Takeaway

For CTOs and infrastructure strategists, understanding memory evolution is no longer optional. As DRAM moves into the DDR5-8000+, LPDDR5X-9600, and HBM3E era, the process node behind each IC becomes a silent driver of AI platform performance.

Essencore’s 1b/1c DRAM ICs signal not only readiness but an intent to lead in next-generation memory systems that can keep pace with growing compute demands.