NVLink Fusion: NVIDIA’s Strategic Shift Toward Open AI Infrastructure

NVIDIA announced NVLink Fusion—a significant architectural and ecosystem initiative designed to open up its high-speed NVLink interconnect to third-party silicon providers.

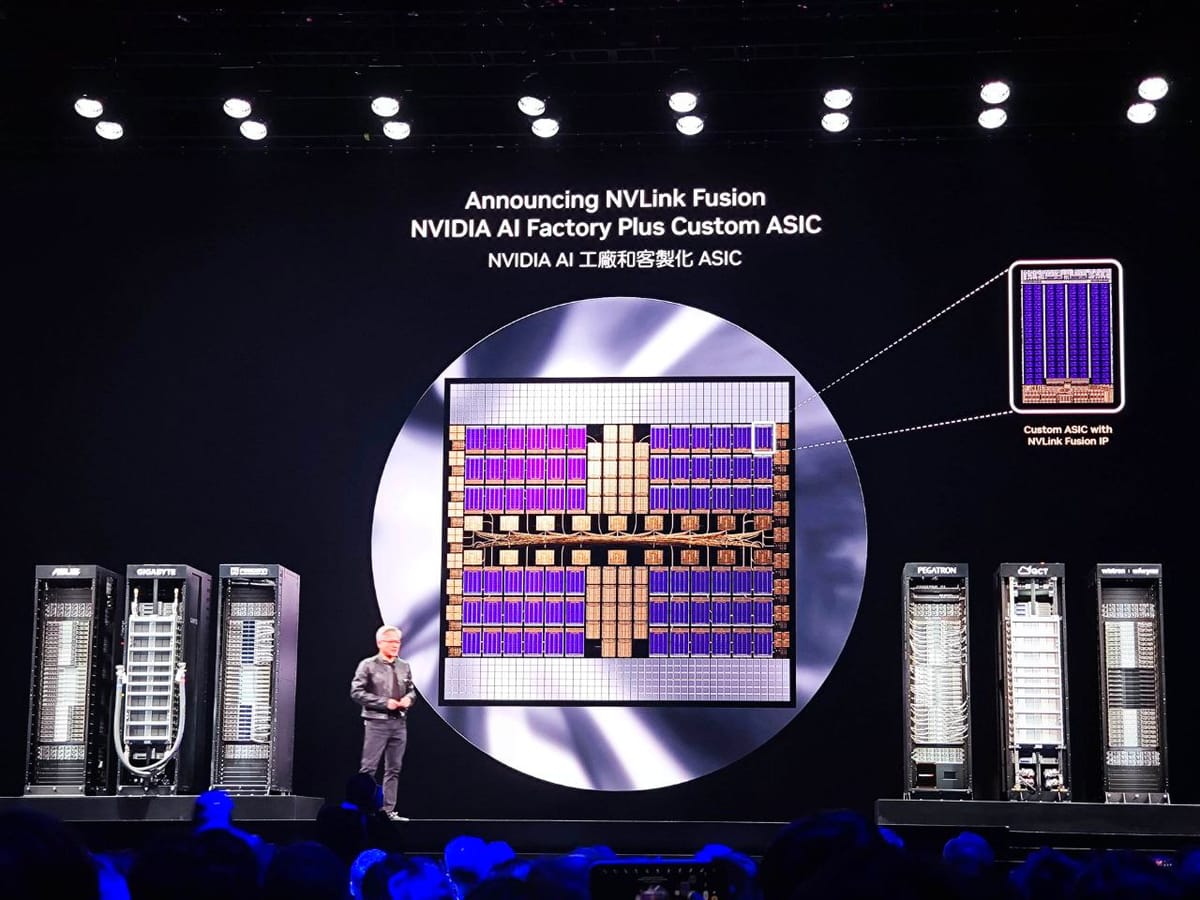

At COMPUTEX 2025, NVIDIA announced NVLink Fusion—a significant architectural and ecosystem initiative designed to open up its high-speed NVLink interconnect to third-party silicon providers. This move represents a notable shift in NVIDIA’s strategy, enabling the creation of semi-custom AI infrastructure where external CPUs, AI accelerators, and domain-specific chips can interoperate directly with NVIDIA’s GPU and networking platforms.

As AI workloads become increasingly specialized and heterogeneous, NVLink Fusion is positioned as the connective tissue that enables system-wide composability—without compromising bandwidth, latency, or memory coherence.

Background: The Challenge of Scaling Heterogeneous AI Systems

Modern data centers are no longer homogenous compute environments. Enterprises and hyperscalers are increasingly investing in custom silicon—whether for large language models, vision inference, simulation, or retrieval workloads. Integrating these accelerators into existing infrastructure, especially when seeking to co-locate with NVIDIA GPUs, has historically been difficult due to limitations in interconnect standards and architectural cohesion.

Previously, NVIDIA’s NVLink—the high-bandwidth, low-latency interconnect that powers systems like DGX and HGX—was used exclusively for intra-NVIDIA connections (e.g., GPU-GPU or GPU-CPU using NVIDIA Grace).

With NVLink Fusion, this boundary is being formally removed.

What is NVLink Fusion?

NVLink Fusion is a new framework that allows third-party chipmakers to integrate directly with NVIDIA’s NVLink architecture through a set of technologies and partnerships. Key components include:

- NVLink IP and chiplets that can be embedded into external silicon.

- Reference integration models for connecting external compute devices (e.g., CPUs, TPUs, ASICs) into NVIDIA's GPU system fabric.

- Support for custom compute boards that combine NVIDIA components with third-party accelerators.

The goal is to allow system designers to build customized AI systems that still benefit from NVIDIA’s NVLink bandwidth, GPU memory sharing, and software stack compatibility.

How Does Integration Work?

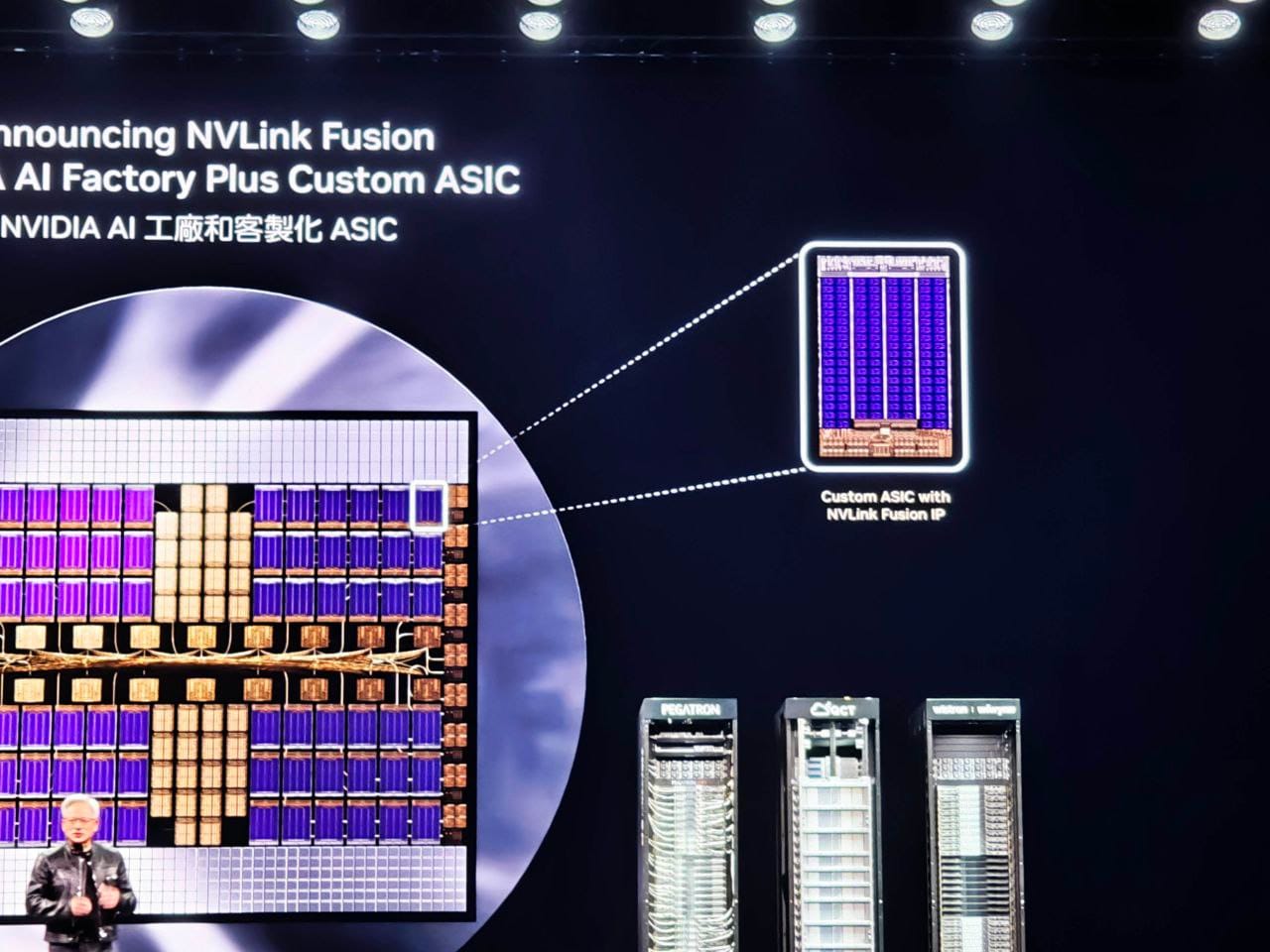

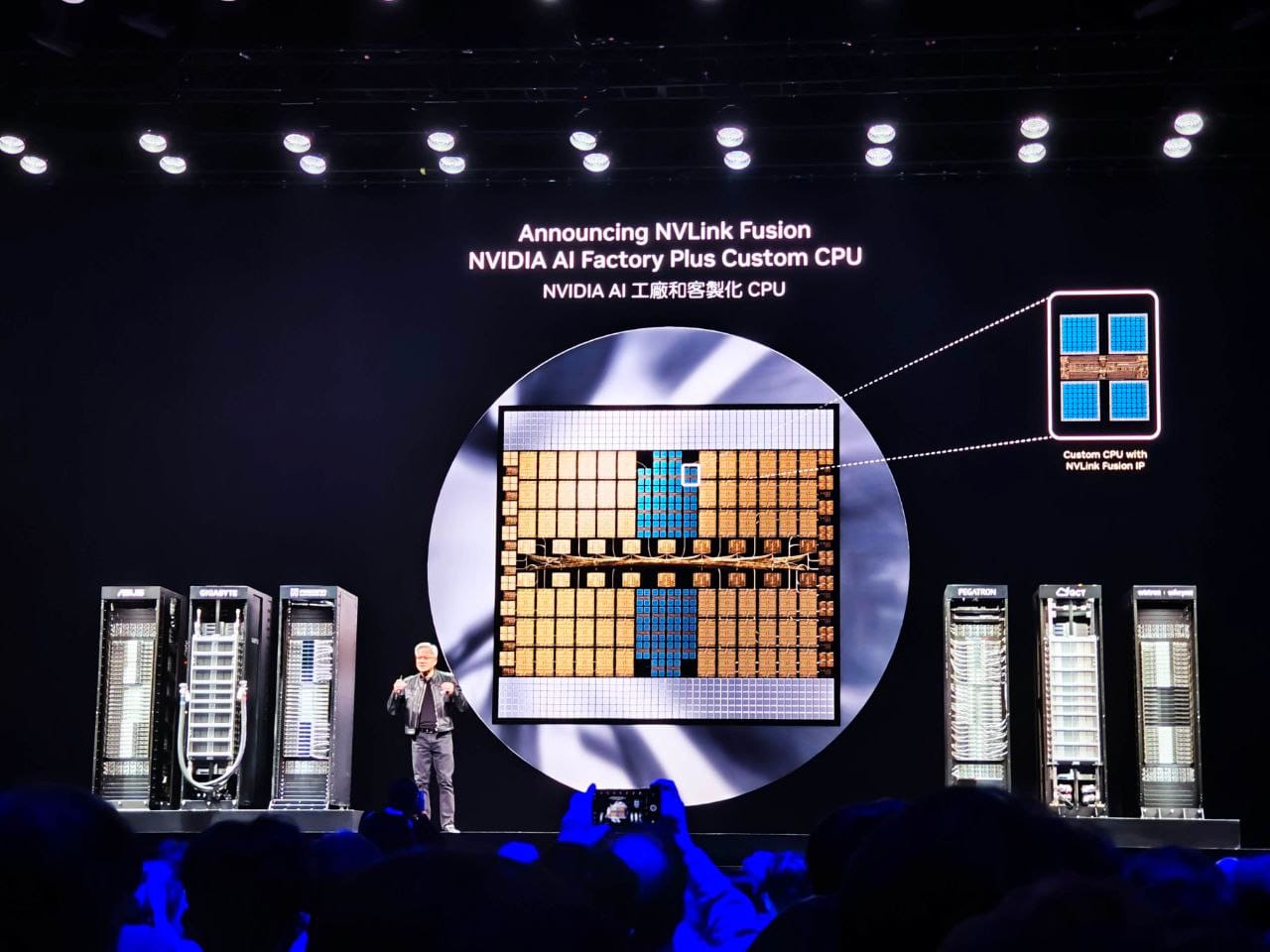

Third-party silicon vendors can integrate NVLink through:

- NVLink Chiplets: Modular physical IP that attaches to custom silicon, functioning like a switch to interface with NVIDIA’s GPUs or NVLink Switches.

- Licensable IP Blocks: These provide direct access to NVLink protocols and electrical interfaces that can be implemented in new ASICs or CPUs.

- System-Level Architecture: NVIDIA provides reference designs to ensure full-stack integration with Spectrum-X networking, Blackwell GPUs, and the CUDA/NCCL software ecosystem.

This approach makes it possible to co-package third-party chips with NVIDIA’s compute units at rack scale, enabling coherent, high-throughput system topologies.

Who Is Participating?

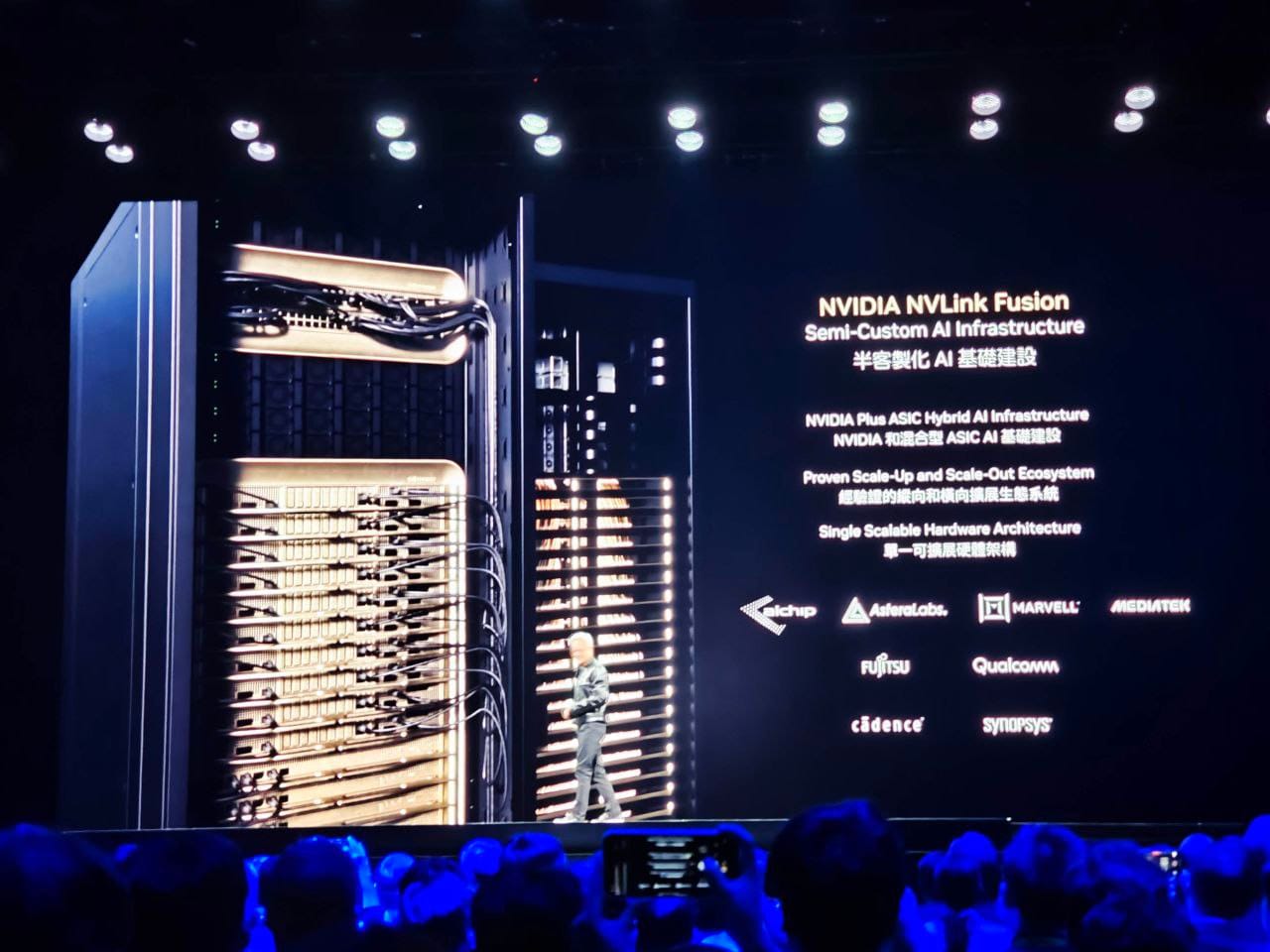

NVIDIA highlighted several initial ecosystem participants:

- Qualcomm and Fujitsu are working on integrating their CPUs into NVLink-compatible systems.

- MediaTek, Marvell, and Alchip Technologies are exploring NVLink-connected ASICs.

- Synopsys and Cadence are enabling design tooling and IP availability for broader industry adoption.

- Astera Labs is contributing to interconnect and memory infrastructure.

By working with these partners, NVIDIA ensures that NVLink Fusion is not just a concept—but a platform play.

Strategic Implications

This initiative represents a fundamental evolution in how NVIDIA positions its hardware ecosystem:

- From closed systems to modular platforms: NVIDIA is enabling others to build their own compute elements and still plug into NVIDIA's ecosystem.

- From monolithic design to composable architecture: Workloads can now dictate compute architecture, not the other way around.

- From silicon vendor to infrastructure enabler: NVIDIA is effectively allowing others to co-develop next-generation AI systems around its interconnect fabric.

For hyperscalers and system integrators, this creates new opportunities to differentiate their stacks—without abandoning the performance and tooling advantages of NVIDIA’s platform.

Conclusion: A Foundation for Semi-Custom AI Infrastructure

NVLink Fusion is more than a hardware announcement—it’s NVIDIA’s formal invitation to the AI industry to co-develop infrastructure at the silicon and system level. As enterprises demand ever more tailored solutions for training, inference, and simulation, the ability to integrate custom compute blocks with NVIDIA’s GPUs natively—and at NVLink speeds—is a transformative capability.

By opening its interconnect fabric, NVIDIA is positioning NVLink not just as a feature of its products, but as the foundational fabric of the AI data center.

Whether it becomes the PCIe of AI infrastructure remains to be seen. But with NVLink Fusion, NVIDIA has taken a clear and deliberate step in that direction.